We care deeply about privacy. We also know that trust is built by transparency. This blog, and the technical paper reference within, is an example of that commitment: we describe an important new Android privacy infrastructure called Private Compute Core (PCC).

Some of our most exciting machine learning features use continuous sensing data — information from the microphone, camera, and screen. These features keep you safe, help you communicate, and facilitate stronger connections with people you care about. To unlock this new generation of innovative concepts, we built a specialized sandbox to privately process and protect this data.

Android Private Compute Core

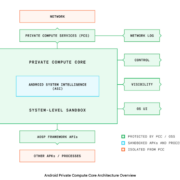

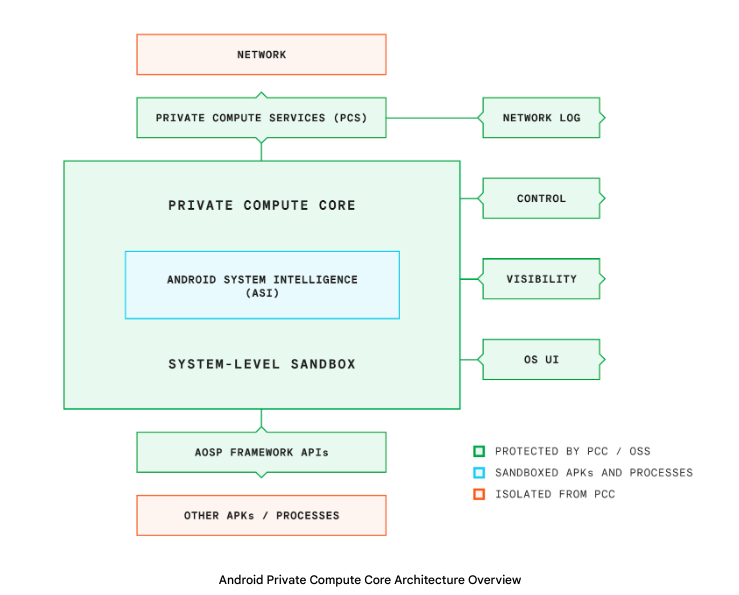

PCC is a secure, isolated data processing environment inside of the Android operating system that gives you control of the data inside, such as deciding if, how, and when it is shared with others. This way, PCC can enable features like Live Translate without sharing continuous sensing data with service providers, including Google.

PCC is part of Protected Computing, a toolkit of technologies that transform how, when, and where data is processed to technically ensure its privacy and safety. For example, by employing cloud enclaves, edge processing, or end-to-end encryption we ensure sensitive data remains in exclusive control of the user.

How Private Compute Core works

PCC is designed to enable innovative features while keeping the data needed for them confidential from other subsystems. We do this by using techniques such as limiting Interprocess Communications (IPC) binds and using isolated processes. These are included as part of the Android Open Source Project and controlled by publicly available surfaces, such as Android framework APIs. For features that run inside PCC, continuous sensing data is processed safely and seamlessly while keeping it confidential.

To stay useful, any machine learning feature has to get better over time. To keep the models that power PCC features up to date, while still keeping the data private, we leverage federated learning and analytics. Network calls to improve the performance of these models can be monitored using Private Compute Services.

Let us show you our work

The publicly-verifiable architectures in PCC demonstrate how we strive to deliver confidentiality and control, and do it in a way that is verifiable and visible to users. In addition to this blog, we provide this transparency through public documentation and open-source code — we hope you’ll have a look below.

To explain in even more detail, we’ve published a technical whitepaper for researchers and interested members of the community. In it, we describe data protections in-depth, the processes and mechanisms we’ve built, and include diagrams of the privacy structures for continuous sensing features.

Private Compute Services was recently open-sourced as well, and we invite our Android community to inspect the code that controls the data management and egress policies. We hope you’ll examine and report back on PCC’s implementation, so that our own documentation is not the only source of analysis.

Our commitment to transparency

Being transparent and engaged with users, developers, researchers, and technologists around the world is part of what makes Android special and, we think, more trustworthy. The paradigm of distributed trust, where credibility is built up from verification by multiple trusted sources, continues to extend this core value. Open sourcing the mechanisms for data protection and processes is one step towards making privacy verifiable. The next step is verification by the community — and we hope you’ll join in.

We’ll continue sharing our progress and look forward to hearing feedback from our users and community on the evolution of Private Compute Core and data privacy at Google.